A code generator abstract base class.

#include <CodeGen_LLVM.h>

Classes | |

| struct | AllEnabledMask |

| Type indicating that the mask to use is all true (all lanes enabled). | |

| struct | Intrinsic |

| Description of an intrinsic function overload. | |

| struct | NoMask |

| Type indicating an intrinsic does not take a mask. | |

| struct | ScopedFastMath |

| If any_strict_float is true, sets fast math flags for the lifetime of this object, then sets them to strict on destruction. | |

| struct | VPArg |

| Support for generating LLVM vector predication intrinsics ("@llvm.vp.*" and "@llvm.experimental.vp.*"). | |

| struct | VPResultType |

Public Member Functions | |

| virtual std::unique_ptr< llvm::Module > | compile (const Module &module) |

| Takes a halide Module and compiles it to an llvm Module. | |

| const Target & | get_target () const |

| The target we're generating code for. | |

| void | set_context (llvm::LLVMContext &context) |

| Tell the code generator which LLVM context to use. | |

| size_t | get_requested_alloca_total () const |

| Public Member Functions inherited from Halide::Internal::IRVisitor | |

| IRVisitor ()=default | |

| virtual | ~IRVisitor ()=default |

Static Public Member Functions | |

| static std::unique_ptr< CodeGen_LLVM > | new_for_target (const Target &target, llvm::LLVMContext &context) |

| Create an instance of CodeGen_LLVM suitable for the target. | |

| static void | initialize_llvm () |

| Initialize internal llvm state for the enabled targets. | |

| static std::unique_ptr< llvm::Module > | compile_trampolines (const Target &target, llvm::LLVMContext &context, const std::string &suffix, const std::vector< std::pair< std::string, ExternSignature > > &externs) |

Protected Types | |

| enum | DestructorType { Always , OnError , OnSuccess } |

| Some destructors should always be called. | |

| enum class | VectorTypeConstraint { None , Fixed , VScale } |

| Interface to abstract vector code generation as LLVM is now providing multiple options to express even simple vector operations. | |

| enum class | WarningKind { EmulatedFloat16 } |

| Warning messages that we want to avoid displaying multiple times. | |

| using | MaskVariant = std::variant<NoMask, AllEnabledMask, llvm::Value *> |

| Predication mask: NoMask or AllEnabledMask for the special cases, and an llvm::Value * for the general case. | |

Protected Member Functions | |

| CodeGen_LLVM (const Target &t) | |

| virtual void | compile_func (const LoweredFunc &func, const std::string &simple_name, const std::string &extern_name) |

| Compile a specific halide declaration into the llvm Module. | |

| virtual void | compile_buffer (const Buffer<> &buffer) |

| virtual void | begin_func (LinkageType linkage, const std::string &simple_name, const std::string &extern_name, const std::vector< LoweredArgument > &args) |

| Helper functions for compiling Halide functions to llvm functions. | |

| virtual void | end_func (const std::vector< LoweredArgument > &args) |

| virtual std::string | mcpu_target () const =0 |

| What should be passed as -mcpu (warning: implies attrs!), -mattrs, and related options for compilation. | |

| virtual std::string | mcpu_tune () const =0 |

| virtual std::string | mattrs () const =0 |

| virtual std::string | mabi () const |

| virtual bool | use_soft_float_abi () const =0 |

| virtual bool | use_pic () const |

| virtual bool | promote_indices () const |

| Should indexing math be promoted to 64-bit on platforms with 64-bit pointers? | |

| virtual int | native_vector_bits () const =0 |

| What's the natural vector bit-width to use for loads, stores, etc.? | |

| virtual int | maximum_vector_bits () const |

| Used to decide whether to break a vector up into multiple smaller operations. | |

| virtual int | target_vscale () const |

| For architectures that have vscale vectors, return the constant vscale to use. | |

| virtual Type | upgrade_type_for_arithmetic (const Type &) const |

| Return the type in which arithmetic should be done for the given storage type. | |

| virtual Type | upgrade_type_for_storage (const Type &) const |

| Return the type that a given Halide type should be stored/loaded from memory as. | |

| virtual Type | upgrade_type_for_argument_passing (const Type &) const |

| Return the type that a Halide type should be passed in and out of functions as. | |

| void | set_fast_fp_math () |

| Change floating-point math op emission to use fast flags. | |

| void | set_strict_fp_math () |

| Change floating-point math op emission to use strict flags. | |

| virtual void | init_context () |

| Grab all the context-specific internal state. | |

| virtual void | init_module () |

| Initialize the CodeGen_LLVM internal state to compile a fresh module. | |

| void | optimize_module () |

| Run all of llvm's optimization passes on the module. | |

| void | sym_push (const std::string &name, llvm::Value *value) |

| Add an entry to the symbol table, hiding previous entries with the same name. | |

| void | sym_pop (const std::string &name) |

| Remove an entry from the symbol table, revealing any previous entries with the same name. | |

| llvm::Value * | sym_get (const std::string &name, bool must_succeed=true) const |

| Fetch an entry from the symbol table. | |

| bool | sym_exists (const std::string &name) const |

| Test if an item exists in the symbol table. | |

| llvm::FunctionType * | signature_to_type (const ExternSignature &signature) |

| Given a Halide ExternSignature, return the equivalent llvm::FunctionType. | |

| llvm::Value * | codegen (const Expr &) |

| Emit code that evaluates an expression, and return the llvm representation of the result of the expression. | |

| void | codegen (const Stmt &) |

| Emit code that runs a statement. | |

| void | scalarize (const Expr &) |

| Codegen a vector Expr by codegenning each lane and combining. | |

| llvm::Value * | register_destructor (llvm::Function *destructor_fn, llvm::Value *obj, DestructorType when) |

| void | trigger_destructor (llvm::Function *destructor_fn, llvm::Value *stack_slot) |

| Call a destructor early. | |

| llvm::BasicBlock * | get_destructor_block () |

| Retrieves the block containing the error handling code. | |

| void | create_assertion (llvm::Value *condition, const Expr &message, llvm::Value *error_code=nullptr) |

| Codegen an assertion. | |

| void | codegen_asserts (const std::vector< const AssertStmt * > &asserts) |

| Codegen a block of asserts with pure conditions. | |

| void | return_with_error_code (llvm::Value *error_code) |

| Return from the pipeline with the given error code. | |

| llvm::Constant * | create_string_constant (const std::string &str) |

| Put a string constant in the module as a global variable and return a pointer to it. | |

| llvm::Constant * | create_binary_blob (const std::vector< char > &data, const std::string &name, bool constant=true) |

| Put a binary blob in the module as a global variable and return a pointer to it. | |

| llvm::Value * | create_broadcast (llvm::Value *, int lanes) |

| Widen an llvm scalar into an llvm vector with the given number of lanes. | |

| llvm::Value * | codegen_buffer_pointer (const std::string &buffer, Type type, llvm::Value *index) |

| Generate a pointer into a named buffer at a given index, of a given type. | |

| llvm::Value * | codegen_buffer_pointer (const std::string &buffer, Type type, Expr index) |

| llvm::Value * | codegen_buffer_pointer (llvm::Value *base_address, Type type, Expr index) |

| llvm::Value * | codegen_buffer_pointer (llvm::Value *base_address, Type type, llvm::Value *index) |

| std::string | mangle_llvm_type (llvm::Type *type) |

| Return type string for LLVM type using LLVM IR intrinsic type mangling. | |

| llvm::Value * | make_halide_type_t (const Type &) |

| Turn a Halide Type into an llvm::Value representing a constant halide_type_t. | |

| void | add_tbaa_metadata (llvm::Instruction *inst, std::string buffer, const Expr &index) |

| Mark a load or store with type-based alias analysis (TBAA) metadata so that llvm knows it can reorder loads and stores across different buffers. | |

| virtual std::string | get_allocation_name (const std::string &n) |

| Get a unique name for the actual block of memory that an allocate node uses. | |

| void | function_does_not_access_memory (llvm::Function *fn) |

| Add the appropriate function attribute to tell LLVM that the function doesn't access memory. | |

| void | visit (const IntImm *) override |

| Generate code for various IR nodes. | |

| void | visit (const UIntImm *) override |

| void | visit (const FloatImm *) override |

| void | visit (const StringImm *) override |

| void | visit (const Cast *) override |

| void | visit (const Reinterpret *) override |

| void | visit (const Variable *) override |

| void | visit (const Add *) override |

| void | visit (const Sub *) override |

| void | visit (const Mul *) override |

| void | visit (const Div *) override |

| void | visit (const Mod *) override |

| void | visit (const Min *) override |

| void | visit (const Max *) override |

| void | visit (const EQ *) override |

| void | visit (const NE *) override |

| void | visit (const LT *) override |

| void | visit (const LE *) override |

| void | visit (const GT *) override |

| void | visit (const GE *) override |

| void | visit (const And *) override |

| void | visit (const Or *) override |

| void | visit (const Not *) override |

| void | visit (const Select *) override |

| void | visit (const Load *) override |

| void | visit (const Ramp *) override |

| void | visit (const Broadcast *) override |

| void | visit (const Call *) override |

| void | visit (const Let *) override |

| void | visit (const LetStmt *) override |

| void | visit (const AssertStmt *) override |

| void | visit (const ProducerConsumer *) override |

| void | visit (const For *) override |

| void | visit (const Store *) override |

| void | visit (const Block *) override |

| void | visit (const IfThenElse *) override |

| void | visit (const Evaluate *) override |

| void | visit (const Shuffle *) override |

| void | visit (const VectorReduce *) override |

| void | visit (const Prefetch *) override |

| void | visit (const Atomic *) override |

| void | visit (const Allocate *) override=0 |

| Generate code for an allocate node. | |

| void | visit (const Free *) override=0 |

| Generate code for a free node. | |

| void | visit (const Provide *) override |

| These IR nodes should have been removed during lowering. | |

| void | visit (const Realize *) override |

| virtual llvm::Type * | llvm_type_of (const Type &) const |

| Get the llvm type equivalent to the given halide type in the current context. | |

| llvm::Type * | llvm_type_of (llvm::LLVMContext *context, Halide::Type t, int effective_vscale) const |

| Get the llvm type equivalent to a given halide type. | |

| llvm::Value * | create_alloca_at_entry (llvm::Type *type, int n, bool zero_initialize=false, const std::string &name="") |

| Perform an alloca at the function entrypoint. | |

| llvm::Value * | get_user_context () const |

| The user_context argument. | |

| virtual llvm::Value * | interleave_vectors (const std::vector< llvm::Value * > &) |

| Implementation of the intrinsic call to interleave_vectors. | |

| llvm::Function * | get_llvm_intrin (const Type &ret_type, const std::string &name, const std::vector< Type > &arg_types, bool scalars_are_vectors=false) |

| Get an LLVM intrinsic declaration. | |

| llvm::Function * | get_llvm_intrin (llvm::Type *ret_type, const std::string &name, const std::vector< llvm::Type * > &arg_types) |

| llvm::Function * | declare_intrin_overload (const std::string &name, const Type &ret_type, const std::string &impl_name, std::vector< Type > arg_types, bool scalars_are_vectors=false) |

| Declare an intrinsic function that participates in overload resolution. | |

| void | declare_intrin_overload (const std::string &name, const Type &ret_type, llvm::Function *impl, std::vector< Type > arg_types) |

| llvm::Value * | call_overloaded_intrin (const Type &result_type, const std::string &name, const std::vector< Expr > &args) |

| Call an overloaded intrinsic function. | |

| llvm::Value * | call_intrin (const Type &t, int intrin_lanes, const std::string &name, std::vector< Expr >) |

| Generate a call to a vector intrinsic or runtime inlined function. | |

| llvm::Value * | call_intrin (const Type &t, int intrin_lanes, llvm::Function *intrin, std::vector< Expr >) |

| llvm::Value * | call_intrin (const llvm::Type *t, int intrin_lanes, const std::string &name, std::vector< llvm::Value * >, bool scalable_vector_result=false, bool is_reduction=false) |

| llvm::Value * | call_intrin (const llvm::Type *t, int intrin_lanes, llvm::Function *intrin, std::vector< llvm::Value * >, bool is_reduction=false) |

| virtual llvm::Value * | slice_vector (llvm::Value *vec, int start, int extent) |

| Take a slice of lanes out of an llvm vector. | |

| virtual llvm::Value * | concat_vectors (const std::vector< llvm::Value * > &) |

| Concatenate a bunch of llvm vectors. | |

| virtual llvm::Value * | shuffle_vectors (llvm::Value *a, llvm::Value *b, const std::vector< int > &indices) |

| Create an LLVM shuffle vectors instruction. | |

| llvm::Value * | shuffle_vectors (llvm::Value *v, const std::vector< int > &indices) |

| Shorthand for shuffling a single vector. | |

| std::pair< llvm::Function *, int > | find_vector_runtime_function (const std::string &name, int lanes) |

| Go looking for a vector version of a runtime function. | |

| virtual bool | supports_atomic_add (const Type &t) const |

| virtual void | codegen_vector_reduce (const VectorReduce *op, const Expr &init) |

| Compile a horizontal reduction that starts with an explicit initial value. | |

| virtual bool | supports_call_as_float16 (const Call *op) const |

| Can we call this operation with float16 type? | |

| llvm::Value * | simple_call_intrin (const std::string &intrin, const std::vector< llvm::Value * > &args, llvm::Type *result_type) |

| call_intrin does far too much to be useful and generally breaks things when one has carefully set things up for a specific architecture; simple_call_intrin does the bare minimum instead. | |

| llvm::Value * | normalize_fixed_scalable_vector_type (llvm::Type *desired_type, llvm::Value *result) |

| Ensure that a vector value is either fixed or vscale, as needed to match desired_type. | |

| llvm::Value * | convert_fixed_or_scalable_vector_type (llvm::Value *arg, llvm::Type *desired_type) |

| Convert an LLVM vector value to desired_type, where the two may differ in kind (scalable or fixed) and in size. | |

| llvm::Value * | fixed_to_scalable_vector_type (llvm::Value *fixed) |

| Convert an LLVM fixed vector value to the corresponding vscale vector value. | |

| llvm::Value * | scalable_to_fixed_vector_type (llvm::Value *scalable) |

| Convert an LLVM vscale vector value to the corresponding fixed vector value. | |

| int | get_vector_num_elements (const llvm::Type *t) |

| Get number of vector elements, taking into account scalable vectors. | |

| llvm::Type * | get_vector_type (llvm::Type *, int n, VectorTypeConstraint type_constraint=VectorTypeConstraint::None) const |

| llvm::Constant * | get_splat (int lanes, llvm::Constant *value, VectorTypeConstraint type_constraint=VectorTypeConstraint::None) const |

| llvm::Value * | match_vector_type_scalable (llvm::Value *value, VectorTypeConstraint constraint) |

| Make sure a value type has the same scalable/fixed vector type as a guide. | |

| llvm::Value * | match_vector_type_scalable (llvm::Value *value, llvm::Type *guide) |

| llvm::Value * | match_vector_type_scalable (llvm::Value *value, llvm::Value *guide) |

| bool | try_vector_predication_comparison (const std::string &name, const Type &result_type, MaskVariant mask, llvm::Value *a, llvm::Value *b, const char *cmp_op) |

| Generate a vector predicated comparison intrinsic call if use_llvm_vp_intrinsics is true and result_type is a vector type. | |

| bool | try_vector_predication_intrinsic (const std::string &name, VPResultType result_type, int32_t length, MaskVariant mask, std::vector< VPArg > args) |

| Generate an intrinsic call if use_llvm_vp_intrinsics is true and length is greater than 1. | |

| llvm::Value * | codegen_dense_vector_load (const Load *load, llvm::Value *vpred=nullptr, bool slice_to_native=true) |

| Generate a basic dense vector load, with an optional predicate and control over whether or not we should slice the load into native vectors. | |

| virtual void | visit (const Fork *) |

| virtual void | visit (const Acquire *) |

| virtual void | visit (const HoistedStorage *) |

Protected Attributes | |

| std::unique_ptr< llvm::Module > | module |

| llvm::Function * | function = nullptr |

| llvm::LLVMContext * | context = nullptr |

| std::unique_ptr< llvm::IRBuilder< llvm::ConstantFolder, llvm::IRBuilderDefaultInserter > > | builder |

| llvm::Value * | value = nullptr |

| llvm::MDNode * | very_likely_branch = nullptr |

| llvm::MDNode * | fast_fp_math_md = nullptr |

| llvm::MDNode * | strict_fp_math_md = nullptr |

| std::vector< LoweredArgument > | current_function_args |

| bool | in_strict_float = false |

| bool | any_strict_float = false |

| Halide::Target | target |

| The target we're generating code for. | |

| llvm::Type * | void_t = nullptr |

| Some useful llvm types. | |

| llvm::Type * | i1_t = nullptr |

| llvm::Type * | i8_t = nullptr |

| llvm::Type * | i16_t = nullptr |

| llvm::Type * | i32_t = nullptr |

| llvm::Type * | i64_t = nullptr |

| llvm::Type * | f16_t = nullptr |

| llvm::Type * | f32_t = nullptr |

| llvm::Type * | f64_t = nullptr |

| llvm::PointerType * | ptr_t = nullptr |

| llvm::StructType * | halide_buffer_t_type = nullptr |

| llvm::StructType * | type_t_type |

| llvm::StructType * | dimension_t_type |

| llvm::StructType * | metadata_t_type = nullptr |

| llvm::StructType * | argument_t_type = nullptr |

| llvm::StructType * | scalar_value_t_type = nullptr |

| llvm::StructType * | device_interface_t_type = nullptr |

| llvm::StructType * | pseudostack_slot_t_type = nullptr |

| llvm::StructType * | semaphore_t_type |

| Expr | wild_u1x_ |

| Some wildcard variables used for peephole optimizations in subclasses. | |

| Expr | wild_i8x_ |

| Expr | wild_u8x_ |

| Expr | wild_i16x_ |

| Expr | wild_u16x_ |

| Expr | wild_i32x_ |

| Expr | wild_u32x_ |

| Expr | wild_i64x_ |

| Expr | wild_u64x_ |

| Expr | wild_f32x_ |

| Expr | wild_f64x_ |

| Expr | wild_u1_ |

| Expr | wild_i8_ |

| Expr | wild_u8_ |

| Expr | wild_i16_ |

| Expr | wild_u16_ |

| Expr | wild_i32_ |

| Expr | wild_u32_ |

| Expr | wild_i64_ |

| Expr | wild_u64_ |

| Expr | wild_f32_ |

| Expr | wild_f64_ |

| size_t | requested_alloca_total = 0 |

| A (very) conservative guess at the size of all alloca() storage requested (including alignment padding). | |

| std::map< std::string, std::vector< Intrinsic > > | intrinsics |

| Mapping of intrinsic functions to the various overloads implementing it. | |

| bool | inside_atomic_mutex_node = false |

| Are we inside an atomic node that uses mutex locks? | |

| bool | emit_atomic_stores = false |

| Emit atomic store instructions? | |

| bool | use_llvm_vp_intrinsics = false |

| Controls use of vector predicated intrinsics for vector operations. | |

| std::map< WarningKind, std::string > | onetime_warnings |

Detailed Description

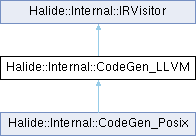

A code generator abstract base class.

Actual code generators (e.g. CodeGen_X86) inherit from this. This class is responsible for taking a Halide Stmt and producing llvm bitcode, machine code in an object file, or machine code accessible through a function pointer.

Definition at line 60 of file CodeGen_LLVM.h.
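The split between shared logic in the base class and target hooks in subclasses can be sketched as follows. This is a minimal, self-contained illustration and not Halide's code. CodeGenBase, ToyX86CodeGen and describe_target() are hypothetical names, and the CPU and attribute strings are illustrative values only.

```cpp
#include <cassert>
#include <string>

// Hypothetical, heavily simplified stand-in for the CodeGen_LLVM structure:
// the base class holds shared logic, while each architecture-specific
// subclass answers target questions through virtual hooks.
class CodeGenBase {
public:
    virtual ~CodeGenBase() = default;

    // Shared logic that consults the target hooks.
    std::string describe_target() const {
        return "cpu=" + mcpu_target() + " attrs=" + mattrs() +
               " max_vector_bits=" + std::to_string(maximum_vector_bits());
    }

protected:
    // Pure virtual: every concrete code generator must answer these.
    virtual std::string mcpu_target() const = 0;
    virtual std::string mattrs() const = 0;
    virtual int native_vector_bits() const = 0;

    // Overridable hook with a default, in the spirit of maximum_vector_bits().
    virtual int maximum_vector_bits() const { return native_vector_bits(); }
};

// Hypothetical x86-like subclass; the strings are illustrative only.
class ToyX86CodeGen : public CodeGenBase {
protected:
    std::string mcpu_target() const override { return "haswell"; }
    std::string mattrs() const override { return "+avx2,+fma"; }
    int native_vector_bits() const override { return 256; }
};
```

The subclass overrides only the pure virtual hooks and inherits the default for maximum_vector_bits(). This mirrors how the hooks below are split into pure virtual and overridable members.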

Member Typedef Documentation

◆ MaskVariant

|

protected |

Predication mask: NoMask or AllEnabledMask for the special cases, and an llvm::Value * for the general case.

Definition at line 642 of file CodeGen_LLVM.h.
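Code that consumes a MaskVariant can dispatch on it with std::visit. In this sketch, Value is a hypothetical placeholder for llvm::Value and describe_mask() is an invented helper. It only shows how the three alternatives might be told apart.

```cpp
#include <cassert>
#include <string>
#include <type_traits>
#include <variant>

// Stand-ins for the tag types and for llvm::Value (hypothetical: the real
// llvm::Value is an LLVM IR object; here it is just a named placeholder).
struct NoMask {};
struct AllEnabledMask {};
struct Value { std::string name; };

using MaskVariant = std::variant<NoMask, AllEnabledMask, Value *>;

// Describe which mask operand a vector-predicated intrinsic call would get.
std::string describe_mask(const MaskVariant &mask) {
    return std::visit([](const auto &m) -> std::string {
        using T = std::decay_t<decltype(m)>;
        if constexpr (std::is_same_v<T, NoMask>) {
            return "no mask operand";
        } else if constexpr (std::is_same_v<T, AllEnabledMask>) {
            return "all-true mask";
        } else {
            return "mask " + m->name;  // general case: an explicit mask value
        }
    }, mask);
}
```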

Member Enumeration Documentation

◆ DestructorType

|

protected |

Some destructors should always be called.

Others should only be called if the pipeline is exiting with an error code (OnError) or, conversely, only if it is exiting successfully (OnSuccess).

| Enumerator | |
|---|---|
| Always | |
| OnError | |
| OnSuccess | |

Definition at line 271 of file CodeGen_LLVM.h.
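The intended semantics of the three enumerators can be modeled with a small cleanup stack. This is a hypothetical sketch: CleanupStack and exit_pipeline() are invented names, Halide emits the equivalent logic as LLVM IR, and the reverse-registration order used here is an assumption.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

enum DestructorType { Always, OnError, OnSuccess };

// Hypothetical model of which registered destructors run when a pipeline
// exits. Halide emits this logic as LLVM IR; the class is only illustrative.
class CleanupStack {
public:
    void register_destructor(std::function<void()> fn, DestructorType when) {
        entries_.push_back({std::move(fn), when});
    }

    // Run matching destructors, most recently registered first (an assumed
    // stack discipline), then forget them. error_code != 0 means failure.
    void exit_pipeline(int error_code) {
        const bool failed = error_code != 0;
        for (auto it = entries_.rbegin(); it != entries_.rend(); ++it) {
            const bool run = it->when == Always ||
                             (it->when == OnError && failed) ||
                             (it->when == OnSuccess && !failed);
            if (run) {
                it->fn();
            }
        }
        entries_.clear();
    }

private:
    struct Entry {
        std::function<void()> fn;
        DestructorType when;
    };
    std::vector<Entry> entries_;
};
```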

◆ VectorTypeConstraint

|

strong, protected |

Interface to abstract vector code generation as LLVM is now providing multiple options to express even simple vector operations.

Specifically: traditional fixed-length vectors, vscale-based variable-length vectors, and the vector-predication approach, in which an explicit length is passed with each instruction.

| Enumerator | |
|---|---|
| None | Use default for current target. |
| Fixed | Force use of fixed size vectors. |
| VScale | Force use of scalable (vscale) vectors. |

Definition at line 595 of file CodeGen_LLVM.h.
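The following is a rough model of how a constraint might select between a fixed type such as <8 x i32> and a scalable type such as <vscale x 4 x i32>. vector_type_name() is hypothetical. The rule used for None (prefer scalable vectors whenever the target has a vscale and the lane count divides evenly by it) is an assumption, not Halide's exact policy.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>

enum class VectorTypeConstraint { None, Fixed, VScale };

// Hypothetical: spell the LLVM IR vector type for `lanes` elements of type
// `elem` (e.g. "i32", "float"). target_vscale == 0 means the target has no
// scalable vectors, matching the documented default of target_vscale().
std::string vector_type_name(const std::string &elem, int lanes,
                             VectorTypeConstraint constraint, int target_vscale) {
    bool scalable = false;
    switch (constraint) {
    case VectorTypeConstraint::Fixed:
        scalable = false;
        break;
    case VectorTypeConstraint::VScale:
        scalable = true;
        break;
    case VectorTypeConstraint::None:
        // Assumed default policy: go scalable when the target supports it and
        // the lane count is a multiple of vscale.
        scalable = target_vscale > 0 && lanes % target_vscale == 0;
        break;
    }
    if (!scalable) {
        return "<" + std::to_string(lanes) + " x " + elem + ">";
    }
    if (target_vscale <= 0 || lanes % target_vscale != 0) {
        throw std::invalid_argument("lane count not representable as a scalable vector");
    }
    // <vscale x k x T> holds k * vscale elements at run time.
    return "<vscale x " + std::to_string(lanes / target_vscale) + " x " + elem + ">";
}
```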

◆ WarningKind

|

strong, protected |

Warning messages that we want to avoid displaying multiple times.

| Enumerator | |
|---|---|
| EmulatedFloat16 | |

Definition at line 679 of file CodeGen_LLVM.h.

Constructor & Destructor Documentation

◆ CodeGen_LLVM()

|

protected |

Member Function Documentation

◆ new_for_target()

|

static |

Create an instance of CodeGen_LLVM suitable for the target.

◆ compile()

|

virtual |

◆ get_target()

|

inline |

The target we're generating code for.

Definition at line 69 of file CodeGen_LLVM.h.

References target.

◆ set_context()

void Halide::Internal::CodeGen_LLVM::set_context(llvm::LLVMContext &context)

Tell the code generator which LLVM context to use.

References context.

◆ initialize_llvm()

|

static |

Initialize internal llvm state for the enabled targets.

◆ compile_trampolines()

|

static |

◆ get_requested_alloca_total()

|

inline |

Definition at line 85 of file CodeGen_LLVM.h.

References requested_alloca_total.

◆ compile_func()

|

protected, virtual |

Compile a specific halide declaration into the llvm Module.

◆ compile_buffer()

|

protected, virtual |

◆ begin_func()

|

protected, virtual |

Helper functions for compiling Halide functions to llvm functions.

begin_func performs all the work necessary to begin generating code with the IRBuilder for a function with a given argument list. A call to begin_func should be followed by a call to end_func with the same arguments, to generate the appropriate cleanup code.

◆ end_func()

|

protected, virtual |

◆ mcpu_target()

|

protected, pure virtual |

What should be passed as -mcpu (warning: implies attrs!), -mattrs, and related options for compilation.

The architecture-specific code generator should define these.

mcpu_target(): target this specific CPU, both in terms of the allowed ISA extensions and of CPU-specific tuning/assembly instruction scheduling.

mcpu_tune(): expect the code to run on this specific CPU, so apply CPU-specific tuning/assembly instruction scheduling, but do NOT sacrifice portability. The code must still run on other CPUs, so only use the ISA extensions enabled by mcpu_target() + mattrs().

◆ mcpu_tune()

|

protected, pure virtual |

◆ mattrs()

|

protected, pure virtual |

◆ mabi()

|

protected, virtual |

◆ use_soft_float_abi()

|

protected, pure virtual |

◆ use_pic()

|

protected, virtual |

◆ promote_indices()

|

inline, protected, virtual |

Should indexing math be promoted to 64-bit on platforms with 64-bit pointers?

Definition at line 133 of file CodeGen_LLVM.h.

◆ native_vector_bits()

|

protected, pure virtual |

What's the natural vector bit-width to use for loads, stores, etc.?

Referenced by maximum_vector_bits().

◆ maximum_vector_bits()

|

inline, protected, virtual |

Used to decide whether to break a vector up into multiple smaller operations.

This is the largest size the architecture supports.

Definition at line 142 of file CodeGen_LLVM.h.

References native_vector_bits().
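The splitting decision comes down to arithmetic on lane counts. In this hypothetical helper, max_vector_bits plays the role of maximum_vector_bits(). Judging by the reference above, the inline default of maximum_vector_bits() appears to be native_vector_bits(). Rounding up to count a trailing partial piece is a choice made by this sketch.

```cpp
#include <cassert>
#include <stdexcept>

// Hypothetical helper: how many pieces a vector of `lanes` elements of
// `elem_bits` bits would be split into if no piece may exceed
// `max_vector_bits` bits.
int num_vector_pieces(int lanes, int elem_bits, int max_vector_bits) {
    if (lanes <= 0 || elem_bits <= 0 || max_vector_bits < elem_bits) {
        throw std::invalid_argument("invalid vector shape");
    }
    const int lanes_per_piece = max_vector_bits / elem_bits;
    // Round up so a trailing partial piece is still counted.
    return (lanes + lanes_per_piece - 1) / lanes_per_piece;
}
```

For example, 16 lanes of 32-bit values with a 256-bit limit give 2 pieces of 8 lanes each.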

◆ target_vscale()

|

inline, protected, virtual |

For architectures that have vscale vectors, return the constant vscale to use.

The default of 0 means vscale vectors are not used. The value generally depends on the target flags and the vector_bits setting.

Definition at line 149 of file CodeGen_LLVM.h.
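For AArch64 SVE, LLVM treats a scalable vector register as vscale × 128 bits. A target with a known vector width could therefore derive a constant vscale as sketched below. sketch_target_vscale() and its parameters are hypothetical, and other ISAs use a different granule size.

```cpp
#include <cassert>

// Hypothetical target_vscale() policy for an SVE-like target, where a scalable
// register holds vscale * 128 bits. vector_bits stands in for the target's
// configured vector width (0 = not specified).
int sketch_target_vscale(bool has_scalable_vectors, int vector_bits) {
    if (!has_scalable_vectors || vector_bits <= 0 || vector_bits % 128 != 0) {
        return 0;  // 0 = do not use vscale vectors (the documented default)
    }
    return vector_bits / 128;
}
```

With a 512-bit vector width this yields a vscale of 4, so a <vscale x 4 x i32> value would hold 16 lanes.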

◆ upgrade_type_for_arithmetic()

|

protected, virtual |

Return the type in which arithmetic should be done for the given storage type.

References Halide::Internal::Type.

◆ upgrade_type_for_storage()

|

protected, virtual |

Return the type that a given Halide type should be stored/loaded from memory as.

References Halide::Internal::Type.

◆ upgrade_type_for_argument_passing()

|

protected, virtual |

Return the type that a Halide type should be passed in and out of functions as.

References Halide::Internal::Type.
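The WarningKind::EmulatedFloat16 enumerator suggests that half-precision math may be emulated on some targets. The sketch below shows one hypothetical upgrade_type_for_arithmetic policy for such a target. ToyType and sketch_upgrade_for_arithmetic() are invented stand-ins, and this is not claimed to be Halide's actual rule.

```cpp
#include <cassert>

// Minimal stand-in for a Halide-like scalar/vector type.
enum class Kind { Int, UInt, Float, BFloat };

struct ToyType {
    Kind kind;
    int bits;
    int lanes;
    bool operator==(const ToyType &o) const {
        return kind == o.kind && bits == o.bits && lanes == o.lanes;
    }
};

// Hypothetical policy: bfloat16 arithmetic, and float16 arithmetic on targets
// without native half-precision support, is done in float32 with the same
// lane count. Every other type is left unchanged.
ToyType sketch_upgrade_for_arithmetic(const ToyType &t, bool has_native_f16) {
    const bool is_half = t.kind == Kind::Float && t.bits == 16;
    if (t.kind == Kind::BFloat || (is_half && !has_native_f16)) {
        return ToyType{Kind::Float, 32, t.lanes};
    }
    return t;
}
```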

◆ set_fast_fp_math()

|

protected |

Change floating-point math op emission to use fast flags.

◆ set_strict_fp_math()

|

protected |

Change floating-point math op emission to use strict flags.
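The ScopedFastMath helper listed under Classes pairs these two calls in an RAII guard. The sketch below models that documented behavior with hypothetical stand-ins (ToyFPState, ScopedFastMathSketch). It tracks a boolean rather than real LLVM fast-math flags.

```cpp
#include <cassert>

// Hypothetical stand-in for a code generator's floating-point mode.
struct ToyFPState {
    bool any_strict_float = false;
    bool fast_math = false;  // flags newly emitted FP ops would receive

    void set_fast_fp_math() { fast_math = true; }
    void set_strict_fp_math() { fast_math = false; }
};

// RAII guard modeled on the documented ScopedFastMath behavior: only when
// any_strict_float is true, switch to fast flags for this scope and back to
// strict flags on destruction.
class ScopedFastMathSketch {
public:
    explicit ScopedFastMathSketch(ToyFPState *state) : state_(state) {
        if (state_->any_strict_float) {
            state_->set_fast_fp_math();
        }
    }
    ~ScopedFastMathSketch() {
        if (state_->any_strict_float) {
            state_->set_strict_fp_math();
        }
    }
    // Non-copyable so the restore happens exactly once.
    ScopedFastMathSketch(const ScopedFastMathSketch &) = delete;
    ScopedFastMathSketch &operator=(const ScopedFastMathSketch &) = delete;

private:
    ToyFPState *state_;
};
```

In line with the class description, the guard restores strict flags on exit rather than whatever mode was active before.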

◆ init_context()

|

protected, virtual |

Grab all the context-specific internal state.

◆ init_module()

|

protected, virtual |

Initialize the CodeGen_LLVM internal state to compile a fresh module.

This allows one CodeGen_LLVM object to be reused to compile multiple related modules (e.g. multiple device kernels).

◆ optimize_module()

|

protected |

Run all of llvm's optimization passes on the module.

◆ sym_push()

|

protected |

◆ sym_pop()

|

protected |

Remove an entry from the symbol table, revealing any previous entries with the same name.

Call this when values go out of scope.

◆ sym_get()

|

protected |

Fetch an entry from the symbol table.

If the symbol is not found, it either errors out (if the second arg is true), or returns nullptr.

◆ sym_exists()

|

protected |

Test if an item exists in the symbol table.
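Taken together, sym_push, sym_pop, sym_get and sym_exists describe a scoped symbol table. A minimal sketch with those semantics follows. ScopedSymbols is hypothetical, int stands in for llvm::Value *, and throwing an exception stands in for Halide's internal error reporting.

```cpp
#include <cassert>
#include <map>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical scoped symbol table with the documented semantics of
// sym_push/sym_pop/sym_get/sym_exists. `int` stands in for llvm::Value *.
class ScopedSymbols {
public:
    // Add an entry, hiding any previous entry with the same name.
    void push(const std::string &name, int value) { table_[name].push_back(value); }

    // Remove the innermost entry, revealing any previous one.
    void pop(const std::string &name) {
        auto it = table_.find(name);
        if (it == table_.end() || it->second.empty()) {
            throw std::logic_error("pop of unknown symbol: " + name);
        }
        it->second.pop_back();
        if (it->second.empty()) {
            table_.erase(it);
        }
    }

    bool exists(const std::string &name) const { return table_.count(name) != 0; }

    // Fetch the innermost entry. If missing, throw (must_succeed) or return nullptr.
    const int *get(const std::string &name, bool must_succeed = true) const {
        auto it = table_.find(name);
        if (it == table_.end()) {
            if (must_succeed) {
                throw std::out_of_range("symbol not found: " + name);
            }
            return nullptr;
        }
        return &it->second.back();
    }

private:
    std::map<std::string, std::vector<int>> table_;
};
```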

◆ signature_to_type()

|

protected |

Given a Halide ExternSignature, return the equivalent llvm::FunctionType.

◆ codegen() [1/2]

|

protected |

Emit code that evaluates an expression, and return the llvm representation of the result of the expression.

◆ codegen() [2/2]

|

protected |

Emit code that runs a statement.

◆ scalarize()

|

protected |

Codegen a vector Expr by codegenning each lane and combining.

◆ register_destructor()

|

protected |

◆ trigger_destructor()

|

protected |

Call a destructor early.

Pass in the value returned by register_destructor().

◆ get_destructor_block()

|

protected |

Retrieves the block containing the error handling code.

Creates it if it doesn't already exist for this function.

◆ create_assertion()

|

protected |

Codegen an assertion.

If the condition is false, returns the error code (if not null); otherwise evaluates and returns the message, which must be an Int(32) expression.
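The control flow described above can be modeled in ordinary code. check_assertion() is a hypothetical helper, not Halide's API. It assumes that the message is evaluated only on the failure path and returns std::nullopt when execution would continue.

```cpp
#include <cassert>
#include <functional>
#include <optional>

// Hypothetical model of the control flow create_assertion() emits: when the
// condition is false, return error_code if one was supplied, otherwise
// evaluate the Int(32) message expression and return that.
std::optional<int> check_assertion(bool condition,
                                   const std::function<int()> &message,
                                   std::optional<int> error_code = std::nullopt) {
    if (condition) {
        return std::nullopt;  // assertion passed; execution continues
    }
    if (error_code) {
        return *error_code;
    }
    return message();
}
```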

◆ codegen_asserts()

|

protected |

Codegen a block of asserts with pure conditions.

◆ return_with_error_code()

|

protected |

Return from the pipeline with the given error code.

Will run the destructor block.

◆ create_string_constant()

|

protected |

Put a string constant in the module as a global variable and return a pointer to it.

◆ create_binary_blob()

|

protected |

Put a binary blob in the module as a global variable and return a pointer to it.

◆ create_broadcast()

|

protected |

Widen an llvm scalar into an llvm vector with the given number of lanes.

◆ codegen_buffer_pointer() [1/4]

|

protected |

Generate a pointer into a named buffer at a given index, of a given type.

The index counts according to the scalar type of the type passed in.

References Halide::Internal::Type.
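Because the index counts elements of the scalar type, the byte offset depends on the element width, not on the full vector width. element_address() below is a hypothetical illustration that uses plain integers for addresses.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical: address of element `index` when the index counts elements of
// the scalar type. For an 8 x int16 vector type, scalar_bytes is 2 (the size
// of one int16 lane), not 16 (the size of the whole vector).
std::uintptr_t element_address(std::uintptr_t base, std::size_t scalar_bytes,
                               std::size_t index) {
    return base + index * scalar_bytes;
}
```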

◆ codegen_buffer_pointer() [2/4]

|

protected |

References Halide::Internal::Type.

◆ codegen_buffer_pointer() [3/4]

|

protected |

References Halide::Internal::Type.

◆ codegen_buffer_pointer() [4/4]

|

protected |

References Halide::Internal::Type.

◆ mangle_llvm_type()

|

protected |

Return type string for LLVM type using LLVM IR intrinsic type mangling.

E.g. ".i32" or ".f32" for scalars, ".p0" for pointers, ".nxv4i32" for a scalable vector of four 32-bit integers, or ".v4f32" for a fixed vector of four 32-bit floats. The dot is included in the result.
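The following is a simplified version of that mangling scheme, covering only the cases listed above. ToyLLVMType and mangle_sketch() are hypothetical. Real LLVM types, and Halide's implementation, cover many more cases (for example bfloat and aggregate types).

```cpp
#include <cassert>
#include <stdexcept>
#include <string>

// Hypothetical, simplified description of an LLVM type.
struct ToyLLVMType {
    enum Kind { Int, Float, Pointer } kind;
    int bits = 0;            // element width for Int/Float
    int lanes = 1;           // 1 = scalar
    bool scalable = false;   // true = <vscale x lanes x T>
    int address_space = 0;   // for pointers
};

// Sketch of LLVM intrinsic type mangling for the cases listed above.
std::string mangle_sketch(const ToyLLVMType &t) {
    std::string elem;
    switch (t.kind) {
    case ToyLLVMType::Int:
        elem = "i" + std::to_string(t.bits);
        break;
    case ToyLLVMType::Float:
        if (t.bits != 16 && t.bits != 32 && t.bits != 64) {
            throw std::invalid_argument("unsupported float width");
        }
        elem = "f" + std::to_string(t.bits);
        break;
    case ToyLLVMType::Pointer:
        elem = "p" + std::to_string(t.address_space);
        break;
    }
    if (t.lanes == 1 && !t.scalable) {
        return "." + elem;
    }
    return std::string(t.scalable ? ".nxv" : ".v") + std::to_string(t.lanes) + elem;
}
```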

◆ make_halide_type_t()

|

protected |

Turn a Halide Type into an llvm::Value representing a constant halide_type_t.

References Halide::Internal::Type.

◆ add_tbaa_metadata()

|

protected |

Mark a load or store with type-based alias analysis (TBAA) metadata so that llvm knows it can reorder loads and stores across different buffers.

◆ get_allocation_name()

|

inline, protected, virtual |

Get a unique name for the actual block of memory that an allocate node uses.

Used so that alias analysis understands when multiple Allocate nodes share the same memory.

Reimplemented in Halide::Internal::CodeGen_Posix.

Definition at line 347 of file CodeGen_LLVM.h.

◆ function_does_not_access_memory()

|

protected |

Add the appropriate function attribute to tell LLVM that the function doesn't access memory.

References Halide::Internal::IRVisitor::visit().

◆ visit() [1/48]

|

override, protected, virtual |

Generate code for various IR nodes.

These can be overridden by architecture-specific code to perform peephole optimizations. The result of each is stored in the value member.

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

Referenced by Halide::Internal::CodeGen_Posix::CodeGen_Posix().
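The convention that each visit() leaves its result in the value member can be illustrated with a toy IR. All types here (ToyExpr, ToyIntImm, ToyAdd, ToyCodeGen) are hypothetical. The "generated code" is just an int produced by constant evaluation.

```cpp
#include <cassert>
#include <memory>
#include <utility>

// Hypothetical miniature IR illustrating the "result goes in `value`" pattern.
struct ToyIntImm;
struct ToyAdd;

struct ToyVisitor {
    virtual ~ToyVisitor() = default;
    virtual void visit(const ToyIntImm *) = 0;
    virtual void visit(const ToyAdd *) = 0;
};

struct ToyExpr {
    virtual ~ToyExpr() = default;
    virtual void accept(ToyVisitor *v) const = 0;
};

struct ToyIntImm : ToyExpr {
    int v;
    explicit ToyIntImm(int x) : v(x) {}
    void accept(ToyVisitor *vis) const override { vis->visit(this); }
};

struct ToyAdd : ToyExpr {
    std::unique_ptr<ToyExpr> a, b;
    ToyAdd(std::unique_ptr<ToyExpr> x, std::unique_ptr<ToyExpr> y)
        : a(std::move(x)), b(std::move(y)) {}
    void accept(ToyVisitor *vis) const override { vis->visit(this); }
};

// Each visit() stores its result in `value`; codegen() dispatches and reads it.
// A real code generator would emit IR here instead of folding constants.
struct ToyCodeGen : ToyVisitor {
    int value = 0;

    int codegen(const ToyExpr &e) {
        e.accept(this);
        return value;
    }
    void visit(const ToyIntImm *op) override { value = op->v; }
    void visit(const ToyAdd *op) override {
        int lhs = codegen(*op->a);  // recursive calls overwrite value,
        int rhs = codegen(*op->b);  // so save each result before the next
        value = lhs + rhs;
    }
};
```

Because nested codegen() calls overwrite value, each visit() copies child results into locals before computing its own.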

◆ visit() [2/48]

|

override, protected, virtual |

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [3/48]

|

override, protected, virtual |

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [4/48]

|

override, protected, virtual |

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [5/48]

|

override, protected, virtual |

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [6/48]

|

override, protected, virtual |

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [7/48]

|

override, protected, virtual |

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [8/48]

|

override, protected, virtual |

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [9/48]

|

override, protected, virtual |

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [10/48]

|

override, protected, virtual |

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [11/48]

|

override, protected, virtual |

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [12/48]

|

override, protected, virtual |

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [13/48]

|

override, protected, virtual |

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [14/48]

|

override, protected, virtual |

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [15/48]

|

override, protected, virtual |

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [16/48]

|

override, protected, virtual |

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [17/48]

|

override, protected, virtual |

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [18/48]

|

override, protected, virtual |

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [19/48]

|

override, protected, virtual |

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [20/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [21/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [22/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [23/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [24/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [25/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [26/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [27/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [28/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [29/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [30/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [31/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [32/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [33/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [34/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [35/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [36/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [37/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [38/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [39/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [40/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [41/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [42/48]

override, protected, pure virtual

Generate code for an allocate node.

It has no default implementation; it must be handled in an architecture-specific way.

Reimplemented from Halide::Internal::IRVisitor.

Implemented in Halide::Internal::CodeGen_Posix.

◆ visit() [43/48]

override, protected, pure virtual

Generate code for a free node.

It has no default implementation and must be handled in an architecture-specific way.

Reimplemented from Halide::Internal::IRVisitor.

Implemented in Halide::Internal::CodeGen_Posix.

◆ visit() [44/48]

override, protected, virtual

These IR nodes should have been removed during lowering.

CodeGen_LLVM reports an error if they are present.

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [45/48]

override, protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ llvm_type_of() [1/2]

protected, virtual

Get the llvm type equivalent to the given halide type in the current context.

References Halide::Internal::Type.

◆ llvm_type_of() [2/2]

protected

Get the llvm type equivalent to a given halide type.

If effective_vscale is nonzero and the type is a vector type whose lane count is a multiple of effective_vscale, a scalable vector type is generated whose minimum lane count is the total lane count divided by effective_vscale. In other words, the result is a scalable vector intended to be used with vscale fixed at effective_vscale.

References context.
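A standalone sketch of the lane arithmetic described above, assuming the only conditions are the ones stated (a vector type whose lane count is a multiple of a nonzero effective_vscale). It does not use LLVM; `VectorShape` and `vector_shape_for` are hypothetical names that only report which kind of type would be produced.

```cpp
#include <cassert>

// Hypothetical model of the type choice in llvm_type_of(); no LLVM involved.
struct VectorShape {
    bool scalable;  // true: <vscale x min_lanes x T>; false: fixed <min_lanes x T> (or scalar)
    int min_lanes;  // fixed lane count, or minimum lane count when scalable
};

VectorShape vector_shape_for(int lanes, int effective_vscale) {
    assert(lanes >= 1 && effective_vscale >= 0);
    // Assumption: only vector types (lanes > 1) whose lane count is a multiple
    // of a nonzero effective_vscale become scalable.
    if (effective_vscale != 0 && lanes > 1 && lanes % effective_vscale == 0) {
        // min_lanes * effective_vscale == lanes, so once vscale is fixed at
        // effective_vscale the scalable type holds exactly `lanes` lanes.
        return {true, lanes / effective_vscale};
    }
    return {false, lanes};
}
```

For example, with effective_vscale = 4 a 16-lane type maps to a scalable vector with 4 minimum lanes (LLVM spelling `<vscale x 4 x T>`), while a 6-lane type stays a fixed vector.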

◆ create_alloca_at_entry()

protected

Perform an alloca at the function entrypoint.

The storage is cleaned up on function exit.

◆ get_user_context()

protected

The user_context argument.

May be a constant null if the function is being compiled without a user context.

◆ interleave_vectors()

protected, virtual

Implementation of the intrinsic call to interleave_vectors.

This implementation allows for interleaving an arbitrary number of vectors.
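The lane ordering produced by interleaving can be sketched without LLVM. This is a hypothetical model (`interleave` and `interleave_indices` are illustrative names, not Halide API) that assumes all inputs have the same length. It shows both the direct result and the equivalent shuffle mask over the concatenation of the inputs, which is one common way to express interleaving in LLVM IR.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Interleave k equal-length vectors:
// out = {v0[0], v1[0], ..., v(k-1)[0], v0[1], v1[1], ...}.
template <typename T>
std::vector<T> interleave(const std::vector<std::vector<T>> &vecs) {
    if (vecs.empty()) return {};
    const std::size_t k = vecs.size();
    const std::size_t n = vecs[0].size();
    std::vector<T> out;
    out.reserve(k * n);
    for (std::size_t lane = 0; lane < n; lane++) {
        for (std::size_t v = 0; v < k; v++) {
            assert(vecs[v].size() == n);  // all inputs must have the same width
            out.push_back(vecs[v][lane]);
        }
    }
    return out;
}

// Equivalent shuffle mask over the concatenation v0 ++ v1 ++ ... ++ v(k-1):
// output position i reads lane (i / k) of input vector (i % k).
std::vector<int> interleave_indices(int k, int n) {
    std::vector<int> idx(k * n);
    for (int i = 0; i < k * n; i++) {
        idx[i] = (i % k) * n + i / k;
    }
    return idx;
}
```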

◆ get_llvm_intrin() [1/2]

protected

Get an LLVM intrinsic declaration.

If it doesn't exist, it will be created.

References Halide::Internal::Type.

◆ get_llvm_intrin() [2/2]

protected

◆ declare_intrin_overload() [1/2]

protected

Declare an intrinsic function that participates in overload resolution.

References Halide::Internal::Type.

◆ declare_intrin_overload() [2/2]

protected

References Halide::Internal::Type.

◆ call_overloaded_intrin()

protected

Call an overloaded intrinsic function.

Returns nullptr if no suitable overload is found.

References Halide::Internal::Type.

◆ call_intrin() [1/4]

protected

Generate a call to a vector intrinsic or runtime inlined function.

The arguments are sliced up into vectors of the width given by 'intrin_lanes', the intrinsic is called on each piece, then the results (if any) are concatenated back together into the original type 't'. For the version that takes an llvm::Type *, the type may be void, so the vector width of the arguments must be specified explicitly as 'called_lanes'.

References Halide::Internal::Type.
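A simplified model of the slicing strategy, assuming the argument vectors have the same width as the result and that the intrinsic accepts exactly `intrin_lanes` lanes. `call_sliced` and `Intrin` are hypothetical names; plain `std::vector<int>` values stand in for LLVM vectors, and the last partial slice is zero-padded here where LLVM would use undef lanes.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical model: std::vector<int> stands in for an LLVM vector value.
using Vec = std::vector<int>;
// An "intrinsic" that only accepts arguments of exactly intrin_lanes lanes.
using Intrin = std::function<Vec(const std::vector<Vec> &)>;

Vec call_sliced(const Intrin &intrin, int intrin_lanes,
                const std::vector<Vec> &args, int result_lanes) {
    assert(intrin_lanes > 0 && result_lanes > 0);
    Vec result;
    for (int start = 0; start < result_lanes; start += intrin_lanes) {
        // Slice lanes [start, start + intrin_lanes) out of every argument,
        // zero-padding past the end (LLVM would pad with undef lanes).
        std::vector<Vec> sliced_args;
        for (const Vec &a : args) {
            Vec s(intrin_lanes, 0);
            for (int i = 0; i < intrin_lanes && start + i < static_cast<int>(a.size()); i++) {
                s[i] = a[start + i];
            }
            sliced_args.push_back(s);
        }
        // Call the intrinsic on this piece and append its result.
        Vec piece = intrin(sliced_args);
        result.insert(result.end(), piece.begin(), piece.end());
    }
    // Drop the lanes that only exist because of padding.
    result.resize(result_lanes);
    return result;
}
```

With a 4-lane intrinsic, a 6-lane call is split into two calls, and the 8 concatenated lanes are trimmed back to 6.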

◆ call_intrin() [2/4]

protected

References Halide::Internal::Type.

◆ call_intrin() [3/4]

protected

◆ call_intrin() [4/4]

protected

◆ slice_vector()

protected, virtual

Take a slice of lanes out of an llvm vector.

Pads with undefs if you ask for more lanes than the vector has.
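A minimal model of the padding behavior, assuming `std::nullopt` stands in for an undef lane. `slice_lanes` is a hypothetical name, not the Halide signature.

```cpp
#include <cassert>
#include <optional>
#include <vector>

// std::nullopt plays the role of LLVM's undef lane in this hypothetical model.
using Lanes = std::vector<std::optional<int>>;

// Take `size` lanes starting at `start`; lanes past the end of `v` stay undefined.
Lanes slice_lanes(const std::vector<int> &v, int start, int size) {
    assert(start >= 0 && size >= 0);
    Lanes out(size);  // every lane starts as std::nullopt ("undef")
    for (int i = 0; i < size; i++) {
        int src = start + i;
        if (src < static_cast<int>(v.size())) out[i] = v[src];
    }
    return out;
}
```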

◆ concat_vectors()

protected, virtual

Concatenate a list of LLVM vectors.

All vectors must be of the same type.

◆ shuffle_vectors() [1/2]

protected, virtual

Create an LLVM shuffle vectors instruction.

Takes a combination of fixed or scalable vectors as input, so long as the effective lengths match, but always returns a fixed vector.
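The indexing rule of a two-input LLVM `shufflevector` can be modeled as follows: an index below n selects from the first input, an index in [n, 2n) selects from the second, and -1 produces an undefined (poison) lane. This is a hypothetical standalone sketch (`shuffle_lanes` is not Halide API) that ignores the fixed/scalable handling described above; note that the result width is set by the number of indices, not by the input width.

```cpp
#include <cassert>
#include <optional>
#include <vector>

// Hypothetical model of two-input shuffle semantics. Inputs must have equal
// length n; std::nullopt marks an undefined lane (mask index -1).
std::vector<std::optional<int>> shuffle_lanes(const std::vector<int> &a,
                                              const std::vector<int> &b,
                                              const std::vector<int> &indices) {
    assert(a.size() == b.size());
    const int n = static_cast<int>(a.size());
    std::vector<std::optional<int>> out;
    out.reserve(indices.size());
    for (int idx : indices) {
        assert(idx >= -1 && idx < 2 * n);
        if (idx < 0) {
            out.push_back(std::nullopt);  // undefined lane
        } else if (idx < n) {
            out.push_back(a[idx]);        // lane from the first input
        } else {
            out.push_back(b[idx - n]);    // lane from the second input
        }
    }
    return out;
}
```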

◆ shuffle_vectors() [2/2]

protected

Shorthand for shuffling a single vector.

◆ find_vector_runtime_function()

protected

Go looking for a vector version of a runtime function.

Returns the best match. Widths are tried in the following order:

1) The requested vector width.

2) The width which is the smallest power of two greater than or equal to the vector width.

3) All the factors of 2) greater than one, in decreasing order.

4) The next power of two above 2), i.e. twice the width from 2).

So for a 5-wide vector, it tries: 5, 8, 4, 2, 16.

If there's no match, returns (nullptr, 0).
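The search order can be written out as a list of candidate widths. This sketch is hypothetical (`runtime_width_candidates` is not Halide API). It interprets step 4 as the power of two immediately above the width from step 2, which matches the 5-wide example, and it skips duplicates, for example when the requested width is already a power of two.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical helper listing candidate widths in the documented search order.
std::vector<int> runtime_width_candidates(int lanes) {
    assert(lanes >= 1);
    std::vector<int> order;
    // Append a width unless it has already been tried.
    auto try_width = [&order](int w) {
        if (std::find(order.begin(), order.end(), w) == order.end()) {
            order.push_back(w);
        }
    };
    try_width(lanes);                // 1) the requested width
    int pow2 = 1;
    while (pow2 < lanes) pow2 *= 2;  // smallest power of two >= lanes
    for (int w = pow2; w > 1; w /= 2) {
        try_width(w);                // 2) pow2 itself, then 3) its factors > 1, decreasing
    }
    try_width(pow2 * 2);             // 4) assumption: the next power of two up
    return order;
}
```

A caller could then look up a runtime function for each width in turn and stop at the first one that exists.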

◆ supports_atomic_add()

protected, virtual

References Halide::Internal::Type.

◆ codegen_vector_reduce()

protected, virtual

Compile a horizontal reduction that starts with an explicit initial value.

There are lots of complex ways to peephole optimize this pattern, especially with the proliferation of dot-product instructions, and they can usefully share logic across backends.
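For reference, the semantics being compiled can be modeled in scalar code. This sketch assumes an add reduction in which each output lane combines a group of adjacent input lanes (group size = input lanes / output lanes) and then adds the corresponding lane of the initial value. `vector_reduce_add` is a hypothetical name; a backend peephole would instead try to map this pattern onto target instructions such as dot products.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical scalar model of a horizontal add-reduction with an explicit
// initial value: groups of adjacent input lanes are summed into init.
std::vector<int> vector_reduce_add(const std::vector<int> &in, const std::vector<int> &init) {
    assert(!init.empty() && in.size() % init.size() == 0);
    const std::size_t factor = in.size() / init.size();
    std::vector<int> out = init;  // start from the explicit initial value
    for (std::size_t i = 0; i < out.size(); i++) {
        for (std::size_t j = 0; j < factor; j++) {
            out[i] += in[i * factor + j];
        }
    }
    return out;
}
```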

◆ supports_call_as_float16()

protected, virtual

Can we call this operation with float16 type?

This is used to avoid generating "emulated" equivalent code when the target has the FP16 feature.

◆ simple_call_intrin()

protected

call_intrin does far too much to be useful and generally breaks things when one has carefully set things up for a specific architecture.

This just does the bare minimum. call_intrin should be refactored and could call this, possibly with renaming of the methods.

◆ normalize_fixed_scalable_vector_type()

protected

Ensure that a vector value is either fixed or vscale, as required to match desired_type.

◆ convert_fixed_or_scalable_vector_type()

protected

Convert between two LLVM vector types that may differ in kind (scalable or fixed) and in size.

Used to handle converting to/from fixed vectors that are smaller than the minimum size scalable vector.

◆ fixed_to_scalable_vector_type()

protected

Convert an LLVM fixed vector value to the corresponding vscale vector value.

◆ scalable_to_fixed_vector_type()

protected

Convert an LLVM vscale vector value to the corresponding fixed vector value.

◆ get_vector_num_elements()

protected

Get number of vector elements, taking into account scalable vectors.

Returns 1 for scalars.
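A sketch of the counting rule, under the assumption that a scalable vector's element count is its minimum lane count multiplied by the vscale the code generator has fixed (effective_vscale). `TypeShape` and `num_elements` are hypothetical stand-ins for the LLVM types involved.

```cpp
#include <cassert>

// Hypothetical stand-in for an LLVM type: scalar, fixed vector, or scalable vector.
struct TypeShape {
    enum Kind { Scalar, Fixed, Scalable } kind;
    int lanes;  // lane count (Fixed) or minimum lane count (Scalable); unused for Scalar
};

// Assumption: scalable vectors are counted at the fixed effective_vscale.
int num_elements(const TypeShape &t, int effective_vscale) {
    switch (t.kind) {
    case TypeShape::Scalar:
        return 1;
    case TypeShape::Fixed:
        return t.lanes;
    case TypeShape::Scalable:
        assert(effective_vscale > 0);
        return t.lanes * effective_vscale;
    }
    return 1;  // unreachable for valid kinds
}
```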

◆ get_vector_type()

protected

References None.

◆ get_splat()

protected

◆ match_vector_type_scalable() [1/3]

protected

Make sure a value type has the same scalable/fixed vector type as a guide.

References value.

◆ match_vector_type_scalable() [2/3]

protected

References value.

◆ match_vector_type_scalable() [3/3]

protected

References value.

◆ try_vector_predication_comparison()

protected

Generate a vector predicated comparison intrinsic call if use_llvm_vp_intrinsics is true and result_type is a vector type.

If generated, assigns the result of the vp intrinsic to value and returns true; otherwise returns false.

References Halide::Internal::Type.

◆ try_vector_predication_intrinsic()

protected

Generate an intrinsic call if use_llvm_vp_intrinsics is true and length is greater than 1.

If generated, assigns the result of the vp intrinsic to value and returns true; otherwise returns false.

◆ codegen_dense_vector_load()

protected

Generate a basic dense vector load, with an optional predicate and control over whether or not we should slice the load into native vectors.

Used by CodeGen_ARM to help with vld2/3/4 emission.

◆ visit() [46/48]

protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [47/48]

protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

◆ visit() [48/48]

protected, virtual

Reimplemented from Halide::Internal::IRVisitor.

Reimplemented in Halide::Internal::CodeGen_Posix.

Member Data Documentation

◆ module

protected

Definition at line 165 of file CodeGen_LLVM.h.

Referenced by compile().

◆ function

protected

Definition at line 166 of file CodeGen_LLVM.h.

◆ context

protected

Definition at line 167 of file CodeGen_LLVM.h.

Referenced by compile_trampolines(), llvm_type_of(), new_for_target(), and set_context().

◆ builder

protected

Definition at line 168 of file CodeGen_LLVM.h.

◆ value

protected

Definition at line 169 of file CodeGen_LLVM.h.

Referenced by get_splat(), match_vector_type_scalable(), match_vector_type_scalable(), match_vector_type_scalable(), and sym_push().

◆ very_likely_branch

protected

Definition at line 170 of file CodeGen_LLVM.h.

◆ fast_fp_math_md

protected

Definition at line 171 of file CodeGen_LLVM.h.

◆ strict_fp_math_md

protected

Definition at line 172 of file CodeGen_LLVM.h.

◆ current_function_args

protected

Definition at line 173 of file CodeGen_LLVM.h.

◆ in_strict_float

protected

Definition at line 175 of file CodeGen_LLVM.h.

◆ any_strict_float

protected

Definition at line 176 of file CodeGen_LLVM.h.

◆ target

protected

The target we're generating code for.

Definition at line 197 of file CodeGen_LLVM.h.

Referenced by compile_trampolines(), get_target(), and new_for_target().

◆ void_t

protected

Some useful llvm types.

Definition at line 232 of file CodeGen_LLVM.h.

◆ i1_t

protected

Definition at line 232 of file CodeGen_LLVM.h.

◆ i8_t

protected

Definition at line 232 of file CodeGen_LLVM.h.

◆ i16_t

protected

Definition at line 232 of file CodeGen_LLVM.h.

◆ i32_t

protected

Definition at line 232 of file CodeGen_LLVM.h.

◆ i64_t

protected

Definition at line 232 of file CodeGen_LLVM.h.

◆ f16_t

protected

Definition at line 232 of file CodeGen_LLVM.h.

◆ f32_t

protected

Definition at line 232 of file CodeGen_LLVM.h.

◆ f64_t

protected

Definition at line 232 of file CodeGen_LLVM.h.

◆ ptr_t

protected

Definition at line 233 of file CodeGen_LLVM.h.

◆ halide_buffer_t_type

protected

Definition at line 234 of file CodeGen_LLVM.h.

◆ type_t_type

protected

Definition at line 235 of file CodeGen_LLVM.h.

◆ dimension_t_type

protected

Definition at line 236 of file CodeGen_LLVM.h.

◆ metadata_t_type

protected

Definition at line 237 of file CodeGen_LLVM.h.

◆ argument_t_type

protected

Definition at line 238 of file CodeGen_LLVM.h.

◆ scalar_value_t_type

protected

Definition at line 239 of file CodeGen_LLVM.h.

◆ device_interface_t_type

protected

Definition at line 240 of file CodeGen_LLVM.h.

◆ pseudostack_slot_t_type

protected

Definition at line 241 of file CodeGen_LLVM.h.

◆ semaphore_t_type

protected

Definition at line 242 of file CodeGen_LLVM.h.

◆ wild_u1x_

protected

Some wildcard variables used for peephole optimizations in subclasses.

Definition at line 249 of file CodeGen_LLVM.h.

◆ wild_i8x_

protected

Definition at line 249 of file CodeGen_LLVM.h.

◆ wild_u8x_

protected

Definition at line 249 of file CodeGen_LLVM.h.

◆ wild_i16x_

protected

Definition at line 249 of file CodeGen_LLVM.h.

◆ wild_u16x_

protected

Definition at line 249 of file CodeGen_LLVM.h.

◆ wild_i32x_

protected

Definition at line 250 of file CodeGen_LLVM.h.

◆ wild_u32x_

protected

Definition at line 250 of file CodeGen_LLVM.h.

◆ wild_i64x_

protected

Definition at line 250 of file CodeGen_LLVM.h.

◆ wild_u64x_

protected

Definition at line 250 of file CodeGen_LLVM.h.

◆ wild_f32x_

protected

Definition at line 251 of file CodeGen_LLVM.h.

◆ wild_f64x_

protected

Definition at line 251 of file CodeGen_LLVM.h.

◆ wild_u1_

protected

Definition at line 254 of file CodeGen_LLVM.h.

◆ wild_i8_

protected

Definition at line 254 of file CodeGen_LLVM.h.

◆ wild_u8_

protected

Definition at line 254 of file CodeGen_LLVM.h.

◆ wild_i16_

protected

Definition at line 254 of file CodeGen_LLVM.h.

◆ wild_u16_

protected

Definition at line 254 of file CodeGen_LLVM.h.

◆ wild_i32_

protected

Definition at line 255 of file CodeGen_LLVM.h.

◆ wild_u32_

protected

Definition at line 255 of file CodeGen_LLVM.h.

◆ wild_i64_

protected

Definition at line 255 of file CodeGen_LLVM.h.

◆ wild_u64_

protected

Definition at line 255 of file CodeGen_LLVM.h.

◆ wild_f32_

protected

Definition at line 256 of file CodeGen_LLVM.h.

◆ wild_f64_

protected

Definition at line 256 of file CodeGen_LLVM.h.

◆ requested_alloca_total

protected

A (very) conservative guess at the size of all alloca() storage requested (including alignment padding).

It's currently meant only to be used as a very coarse way to ensure there is enough stack space when testing on the WebAssembly backend.

It is not meant to be a useful proxy for "stack space needed", for a number of reasons:

- allocas with non-overlapping lifetimes will share space

- on some backends, LLVM may promote register-sized allocas into registers

- while this accounts for alloca() calls we know about, it doesn't attempt to account for stack spills, function call overhead, etc.

Definition at line 452 of file CodeGen_LLVM.h.

Referenced by get_requested_alloca_total().
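A hypothetical illustration of why such a tally only over-approximates: each request is charged its size plus worst-case alignment padding, and nothing is ever subtracted (no lifetime sharing, register promotion, spills, or call overhead is modeled). `AllocaTally` is an illustrative class; this is not how Halide necessarily computes its padding.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical conservative tally in the spirit of requested_alloca_total.
class AllocaTally {
public:
    // Charge `bytes` plus the most padding a power-of-two `align` could require.
    void request(std::size_t bytes, std::size_t align) {
        assert(align > 0 && (align & (align - 1)) == 0);
        total_ += bytes + (align - 1);
    }
    std::size_t total() const { return total_; }

private:
    std::size_t total_ = 0;  // only ever grows
};
```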

◆ intrinsics

protected

Mapping of intrinsic functions to the various overloads implementing it.

Definition at line 476 of file CodeGen_LLVM.h.

◆ inside_atomic_mutex_node

protected

Are we inside an atomic node that uses mutex locks?

This is used for detecting deadlocks from nested atomics and illegal vectorization.

Definition at line 552 of file CodeGen_LLVM.h.

◆ emit_atomic_stores

protected

Emit atomic store instructions?

Definition at line 555 of file CodeGen_LLVM.h.

◆ use_llvm_vp_intrinsics

protected

Controls use of vector predicated intrinsics for vector operations.

Will be set by certain backends (e.g. RISC-V) to control codegen.

Definition at line 670 of file CodeGen_LLVM.h.

◆ onetime_warnings

protected

Definition at line 682 of file CodeGen_LLVM.h.

The documentation for this class was generated from the following file:

- src/CodeGen_LLVM.h